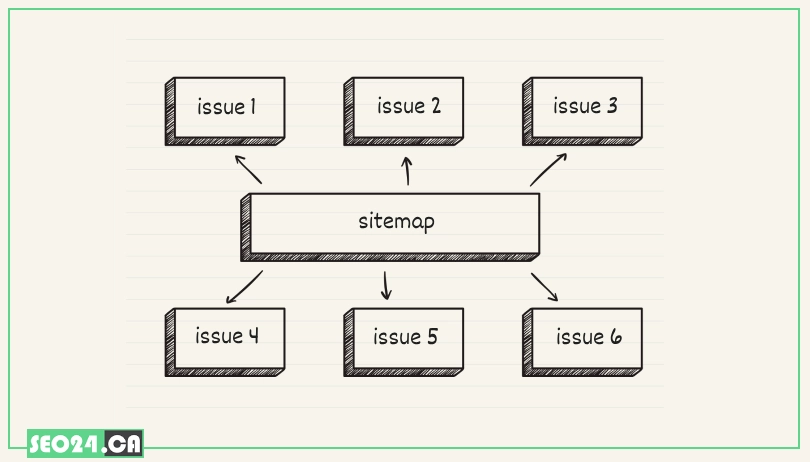

A Complete Guide to Common Sitemap Errors and How to Fix Them

Sitemap issues can silently undermine your website’s SEO by preventing search engines from properly crawling and indexing your pages. Understanding these common problems and knowing how to troubleshoot them is crucial for maintaining strong search visibility. This guide will help you identify, fix, and prevent sitemap issues to ensure your site performs at its best in search results.

Sitemap Not Found/Accessible (HTTP Errors)

Problem Description: One of the most common sitemap issues is when your sitemap cannot be found or accessed due to HTTP errors. In this case, search engines like Google may fail to discover and index your website content properly. This issue can prevent crawlers from understanding your site structure, leading to missed indexing opportunities and lower visibility in search results.

🔎Possible Causes:

- Incorrect URL in robots.txt or Search Console: The sitemap URL might be misspelled, outdated, or incorrectly submitted, causing crawlers to fail to locate it.

- File moved or deleted: If the sitemap file was deleted or relocated without updating references, it may result in a 404 error.

- Server issues (404, 500 errors): Server misconfigurations or temporary outages can return HTTP errors that block sitemap access.

- Incorrect file permissions: If the server blocks access due to restrictive file permissions, search engines won’t be able to retrieve the sitemap.

🔧Troubleshooting Steps:

- Verify sitemap URL in browser: Enter the sitemap URL in your browser to confirm it loads without errors.

- Check robots.txt for Sitemap: directive: Make sure the robots.txt file includes the correct and complete sitemap URL.

- Inspect server logs for errors: Review server logs to identify any 404 or 500 errors when bots attempt to fetch the sitemap.

- Ensure file permissions allow access: Check that the sitemap file is readable by external bots and not restricted by server settings.

- Resubmit sitemap in Search Console: Use Google Search Console to resubmit the sitemap so Google can re-crawl it with the correct path.
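
The robots.txt check in the steps above can be scripted. The following is a minimal sketch, assuming the robots.txt contents are already available as a string; `extract_sitemap_urls` and `is_absolute_http_url` are hypothetical helper names, and `example.com` is a placeholder domain.

```python
from urllib.parse import urlparse


def extract_sitemap_urls(robots_txt: str) -> list[str]:
    """Return every URL declared with a Sitemap: directive in robots.txt text."""
    urls = []
    for line in robots_txt.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        key, sep, value = line.partition(":")
        # The directive name is matched case-insensitively.
        if sep and key.strip().lower() == "sitemap":
            urls.append(value.strip())
    return urls


def is_absolute_http_url(url: str) -> bool:
    """A Sitemap: directive should carry a full URL, not a relative path."""
    parsed = urlparse(url)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


sample = """User-agent: *
Disallow: /admin/
Sitemap: https://example.com/sitemap.xml
sitemap: /sitemap-news.xml
"""

for url in extract_sitemap_urls(sample):
    status = "ok" if is_absolute_http_url(url) else "not an absolute URL"
    print(f"{url}: {status}")
```

A relative path in the directive is one of the easier mistakes to miss by eye, which is why the sketch flags it separately.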

Sitemap Submitted, But Zero URLs Discovered/Indexed

Problem Description: Sometimes a sitemap is submitted successfully in Google Search Console, but no URLs are discovered or indexed. This means that while the file was found, search engines couldn’t extract any valid, indexable pages from it, often because of errors in the sitemap structure or restrictions on the linked pages.

🔎Possible Causes:

- Empty sitemap: If the sitemap file doesn’t actually list any URLs, search engines won’t have anything to crawl.

- Incorrect XML formatting (syntax errors): XML syntax errors, like missing tags or invalid characters, can prevent Google from reading the file properly.

- Sitemap pointing to non-existent URLs (404s): If the sitemap includes broken or outdated URLs, crawlers may disregard the file entirely.

- All URLs blocked by robots.txt or noindex tags: If every listed URL is blocked from crawling or indexing, Google won’t add them to its index.

- Canonicalization issues within the sitemap: If the pages listed in the sitemap declare a canonical URL that differs from the URL listed, Google may index the canonical target instead and skip the listed pages.

🔧Troubleshooting Steps:

- Open sitemap in browser to check content: Load the sitemap in your browser and make sure it contains a list of valid, accessible URLs.

- Use a sitemap validator tool: Use an online XML validator to check for formatting or syntax issues in the sitemap structure.

- Crawl URLs in sitemap to check for 404s: Run the listed URLs through a site crawler like Screaming Frog to identify broken links.

- Check robots.txt for disallow rules impacting sitemap URLs: Ensure none of the sitemap URLs are being blocked from crawling.

- Verify noindex meta tags on pages: Confirm that the pages listed are not marked as noindex, which would prevent them from appearing in search.

- Ensure rel="canonical" is correctly used: Double-check that canonical tags on the pages point to the same URL listed in the sitemap.

💡 Don’t hesitate to get in touch with our trusted SEO agency in North York for expert guidance and professional SEO services. Our team is ready to help you improve your website’s performance and achieve the best online results.
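
The first troubleshooting step, checking that the sitemap actually lists URLs, can also be done in code. This is a rough sketch using Python's standard library; `list_sitemap_urls` is a hypothetical helper, and the sample documents are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def list_sitemap_urls(xml_text: str) -> list[str]:
    """Return the <loc> value of every <url> entry.

    Raises xml.etree.ElementTree.ParseError if the XML is malformed.
    """
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text and loc.text.strip()
    ]


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about</loc></url>
</urlset>"""

empty = '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"></urlset>'

print(len(list_sitemap_urls(sample)), "URLs found in the sample sitemap")
print(len(list_sitemap_urls(empty)), "URLs found in the empty sitemap")
```

A count of zero on a file that parses cleanly points to an empty sitemap or a missing namespace rather than a syntax error.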

Sitemap Contains Incorrect/Outdated URLs

Problem Description: When a sitemap includes broken, outdated, or inconsistent URLs, it can confuse search engines and lead to wasted crawl budget, indexing errors, or missed ranking opportunities. This often happens on sites with frequent updates or poor sitemap maintenance.

🔎Possible Causes:

- Sitemap not being updated regularly: If your site changes frequently but the sitemap remains static, it may continue referencing outdated or deleted URLs.

- Improper sitemap generation (e.g., static sitemap for dynamic site): Using a manually created or outdated static sitemap on a site with dynamic content can lead to discrepancies.

- Duplicate content issues (e.g., HTTP vs. HTTPS, www vs. non-www): If multiple versions of the same URL are included, search engines may split ranking signals or index the wrong one.

🔧Troubleshooting Steps:

- Automate sitemap generation/updates (plugins, scripts): Use CMS plugins or scheduled scripts to generate updated sitemaps as your site content changes.

- Implement proper 301 redirects for old URLs: Redirect outdated URLs to their correct counterparts to maintain link equity and avoid broken links in the sitemap.

- Ensure consistency in URL structure (HTTPS, www/non-www): Stick to one preferred version of your domain and ensure the sitemap reflects it consistently.

- Only include canonical URLs: Make sure all URLs listed in the sitemap match the canonical version declared on each page to avoid duplication or confusion.
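
One way to check URL consistency is to compare every sitemap URL against the preferred scheme and host. The sketch below assumes the preferred version is `https://www.example.com`; both the function name and the defaults are illustrative, not part of any tool.

```python
from urllib.parse import urlparse


def find_inconsistent_urls(urls, preferred_scheme="https", preferred_host="www.example.com"):
    """Return URLs whose scheme or host differs from the preferred version."""
    mismatched = []
    for url in urls:
        parsed = urlparse(url)
        # parsed.hostname is already lowercased by urlparse.
        if parsed.scheme != preferred_scheme or parsed.hostname != preferred_host:
            mismatched.append(url)
    return mismatched


sitemap_urls = [
    "https://www.example.com/pricing",
    "http://www.example.com/pricing",   # HTTP instead of HTTPS
    "https://example.com/contact",      # non-www host
]

for url in find_inconsistent_urls(sitemap_urls):
    print("inconsistent:", url)
```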

Sitemap Size Exceeds Limits

Problem Description: Search engines place limits on the size of sitemap files—both in terms of file size (50MB uncompressed) and the number of URLs (50,000 per file). When your sitemap exceeds these limits, Google may ignore parts of it or fail to process it altogether.

🔎Possible Causes:

- Very large website: Sites with tens of thousands of pages—such as large e-commerce platforms or media archives—can easily surpass sitemap size constraints.

🔧Troubleshooting Steps:

- Use sitemap index files to break down into smaller sitemaps: Organize multiple sitemap files under a single index file (sitemap index), which allows better scalability and crawlability.

- Exclude less important or duplicate content from the sitemap: Focus your sitemap on high-priority, indexable pages and remove thin, duplicate, or low-value content.
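
Splitting a large URL list and producing a sitemap index might look like the sketch below. It only enforces the 50,000-URL limit; the 50MB file-size limit would need a separate check. The file names (`sitemap-1.xml` and so on) and helper names are assumptions for illustration.

```python
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive chunks of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls):
    """Build a sitemap index document that references each child sitemap."""
    entries = "\n".join(
        f"  <sitemap><loc>{escape(url)}</loc></sitemap>" for url in sitemap_urls
    )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
        f"{entries}\n"
        "</sitemapindex>\n"
    )


# 120,000 placeholder URLs need three child sitemaps.
urls = [f"https://example.com/product/{n}" for n in range(120_000)]
chunks = chunk_urls(urls)
child_sitemaps = [f"https://example.com/sitemap-{i + 1}.xml" for i in range(len(chunks))]

print(len(chunks), "child sitemaps")
print(build_sitemap_index(child_sitemaps))
```

Only the index file then needs to be submitted in Search Console or referenced in robots.txt.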

📖 Learn the essentials of building an effective sitemap with our step-by-step guide. Check out how to create sitemap and start improving your website’s crawlability today.

URLs in Sitemap Are Blocked by Robots.txt

Problem Description: When URLs listed in your sitemap are blocked by rules in your robots.txt file, search engines cannot crawl those pages despite them being submitted for indexing. This inconsistency can reduce your site’s crawl efficiency and lead to indexing problems.

🔎Possible Causes:

- Discrepancy between sitemap and robots.txt strategy: The sitemap may include URLs that are disallowed in robots.txt, creating conflicting instructions for search engines.

🔧Troubleshooting Steps:

- Audit robots.txt and sitemap concurrently: Review both files together to identify URLs that are blocked but still listed in the sitemap.

- Remove disallowed URLs from the sitemap: Ensure your sitemap only contains URLs that are allowed to be crawled.

- Decide if pages should be indexed (remove disallow) or not (remove from sitemap): Choose whether to allow crawling by removing disallow rules or exclude pages from the sitemap to prevent confusion.
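
The audit described above can be approximated with Python's built-in robots.txt parser. This is a first-pass sketch: `find_blocked_urls` is a hypothetical helper, and the standard-library parser does not necessarily interpret wildcard rules the way Google does, so results are worth confirming in Search Console.

```python
from urllib.robotparser import RobotFileParser


def find_blocked_urls(robots_txt: str, sitemap_urls, user_agent="Googlebot"):
    """Return the sitemap URLs that the given robots.txt rules disallow."""
    parser = RobotFileParser()
    # parse() accepts the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return [url for url in sitemap_urls if not parser.can_fetch(user_agent, url)]


robots_txt = """User-agent: *
Disallow: /private/
"""

sitemap_urls = [
    "https://example.com/",
    "https://example.com/private/report",
    "https://example.com/blog/post",
]

for url in find_blocked_urls(robots_txt, sitemap_urls):
    print("listed in sitemap but disallowed:", url)
```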

URLs in Sitemap Have noindex Tags

Problem Description: When URLs listed in your sitemap contain noindex meta tags, search engines are instructed not to index those pages, which contradicts the purpose of including them in the sitemap. This inconsistency can confuse crawlers and waste crawl budget.

🔎Possible Causes:

- Pages were intended not to be indexed but remained in the sitemap: Sometimes pages with noindex directives are mistakenly left in the sitemap, signaling conflicting indexing instructions.

🔧Troubleshooting Steps:

- Identify pages with noindex tags: Use SEO tools or manual checks to find pages that include noindex meta tags.

- Remove these pages from the sitemap: Exclude pages marked as noindex to avoid sending mixed signals to search engines.

- If you want them indexed, remove the noindex tag: Ensure the meta tag aligns with your indexing goals by removing noindex if indexing is desired.
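
Finding noindex pages by hand is slow on larger sites. As a minimal sketch, assuming the page HTML has already been fetched, the following checks for a robots meta tag containing noindex. It does not cover the `X-Robots-Tag` HTTP header, which can carry the same directive. The class and function names are illustrative.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from <meta name="robots"> (or googlebot) tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            # The content attribute is a comma-separated list of directives.
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page carries a noindex directive in a robots meta tag."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head><body></body></html>'
print(has_noindex(page))
```

Any sitemap URL for which this returns True should either lose the tag or leave the sitemap.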

XML Parsing Errors

Problem Description: XML parsing errors occur when the sitemap file contains syntax mistakes or invalid characters that prevent search engines from reading and processing the sitemap properly. This can lead to incomplete indexing or rejection of the sitemap.

🔎Possible Causes:

- Missing tags, unescaped characters, incorrect encoding: Errors like omitted closing tags, unescaped special characters (e.g., & or "), or wrong file encoding cause parsing failures.

- Manual edits introducing errors: Editing sitemap files by hand without proper XML knowledge often introduces syntax issues.

🔧Troubleshooting Steps:

- Use a reliable sitemap generator: Automatically generate sitemaps using trusted tools to minimize human errors.

- Validate sitemap using online XML validators or Search Console’s sitemap report: Regularly check your sitemap for errors with validation tools or reports in Google Search Console.

- Check for special characters (ampersands, quotes) that need escaping: Ensure characters like & are properly escaped as &amp; to maintain XML validity.
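
To illustrate the escaping step, the sketch below shows how an unescaped ampersand in a URL breaks parsing and how escaping repairs it. It uses only Python's standard library; `find_parse_error` is a hypothetical helper, and the namespace is omitted from the sample to keep it short.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape


def find_parse_error(xml_text: str):
    """Return None if the XML is well-formed, otherwise the parser's error message."""
    try:
        ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return str(exc)
    return None


raw_url = "https://example.com/search?q=shoes&page=2"

# The raw "&" makes the document malformed.
broken = f"<urlset><url><loc>{raw_url}</loc></url></urlset>"
# escape() rewrites &, < and > as XML entities.
fixed = f"<urlset><url><loc>{escape(raw_url)}</loc></url></urlset>"

print("broken:", find_parse_error(broken))
print("fixed:", find_parse_error(fixed))
```

Generating the file through a serializer or an escaping helper, rather than string concatenation of raw URLs, avoids this class of error entirely.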

Sitemap Not Being Crawled Frequently Enough

Problem Description: If website sitemaps aren’t crawled regularly, search engines may not promptly discover new or updated content, leading to delays in indexing and reduced visibility.

🔎Possible Causes:

- Low crawl budget (though sitemaps help with this): Sites with limited crawl budgets may experience slower sitemap crawling despite sitemap submissions.

- lastmod not being updated: If the lastmod date in the sitemap is outdated or missing, search engines may not prioritize crawling those URLs.

- General site health issues: Technical problems like slow server response or frequent errors can reduce crawl frequency.

🔧Troubleshooting Steps:

- Ensure lastmod is accurately reflecting the last modification date: Keep the lastmod tag up to date to signal fresh content to crawlers.

- Focus on overall site performance and health: Improve site speed, fix errors, and maintain a clean site structure to encourage regular crawling.

- Notify search engines of updates (Search Console submission is usually sufficient): Submit sitemaps via Search Console when they change. Google has deprecated its sitemap ping endpoint, so an accurate lastmod value and a Search Console submission are the signals to rely on there.
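
Keeping lastmod accurate is easiest when the value is generated from the content's real modification time. Here is a small sketch, assuming that time is available as a Python `datetime`; the helper names are illustrative, and treating naive timestamps as UTC is an assumption to adapt to your own data.

```python
from datetime import datetime, timezone
from xml.sax.saxutils import escape


def format_lastmod(modified_at: datetime) -> str:
    """Format a modification time as a W3C Datetime string for <lastmod>."""
    if modified_at.tzinfo is None:
        # Assumption: timestamps without a timezone are treated as UTC.
        modified_at = modified_at.replace(tzinfo=timezone.utc)
    return modified_at.isoformat(timespec="seconds")


def url_entry(loc: str, modified_at: datetime) -> str:
    """Build one <url> entry with an escaped <loc> and a formatted <lastmod>."""
    return (
        "<url>"
        f"<loc>{escape(loc)}</loc>"
        f"<lastmod>{format_lastmod(modified_at)}</lastmod>"
        "</url>"
    )


print(url_entry("https://example.com/blog/post", datetime(2024, 5, 1, 9, 30)))
```

The value should change only when the page content meaningfully changes; stamping every URL with the current time on each build makes the field less useful to crawlers.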

💡A skilled SEO team understands all the common sitemap errors and knows how to address them effectively to improve your site’s indexing and rankings. For expert support, trust the SEO service in Toronto that keeps your website running smoothly and search-engine friendly.

Sitemap Issues & How to Resolve Them

Incorrect URLs in Sitemap

Including URLs that are blocked by robots.txt, marked as noindex, or redirecting (e.g., 301/302) can hinder search engine crawling and indexing. Ensure your sitemap only contains URLs that are accessible and intended for indexing.

source: Common XML sitemap errors

Sitemap Size Limitations

A single sitemap file should not exceed 50MB (uncompressed) or contain more than 50,000 URLs. If your site exceeds these limits, consider creating multiple sitemap files and referencing them in a sitemap index file. source: Build and submit a sitemap

Sitemap Accessibility Issues

Ensure your sitemap is accessible to search engines. If Google Search Console reports a “Couldn’t Fetch” error, verify that your sitemap URL is correct, not blocked by robots.txt, and accessible without authentication. source: How to fix sitemap errors: Common issues & best practices

Outdated or Missing Sitemaps

Regularly update your sitemap to reflect changes in your website’s content. An outdated sitemap can lead to missing or incorrect pages being indexed. source: XML Sitemap

Incorrect Sitemap Format

Ensure your sitemap adheres to the XML sitemap protocol. Common errors include incorrect tag nesting, missing required tags (<urlset>, <url>, <loc>), and improper date formats.
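
A basic format check can catch these errors before submission. The sketch below assumes the required elements are `<urlset>`, `<url>`, and `<loc>` as defined by the sitemaps.org protocol, and it validates only the most common `<lastmod>` forms (a date, optionally followed by a time and timezone). The function name and sample data are hypothetical.

```python
import re
import xml.etree.ElementTree as ET

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"

# Accepts YYYY-MM-DD, optionally followed by a time and a timezone designator.
LASTMOD_RE = re.compile(
    r"^\d{4}-\d{2}-\d{2}(T\d{2}:\d{2}(:\d{2}(\.\d+)?)?(Z|[+-]\d{2}:\d{2}))?$"
)


def check_sitemap_structure(xml_text: str) -> list[str]:
    """Return a list of problems found; an empty list means none were detected."""
    problems = []
    root = ET.fromstring(xml_text)
    if root.tag != f"{NS}urlset":
        problems.append(f"root element is {root.tag!r}, expected <urlset> in the sitemap namespace")
        return problems
    for index, url in enumerate(root.findall(f"{NS}url"), start=1):
        loc = url.find(f"{NS}loc")
        if loc is None or not (loc.text or "").strip():
            problems.append(f"<url> #{index} has no <loc>")
        lastmod = url.find(f"{NS}lastmod")
        if lastmod is not None and not LASTMOD_RE.match((lastmod.text or "").strip()):
            problems.append(f"<url> #{index} has an invalid <lastmod>: {lastmod.text!r}")
    return problems


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc><lastmod>2024-05-01</lastmod></url>
  <url><lastmod>01/05/2024</lastmod></url>
</urlset>"""

for problem in check_sitemap_structure(sample):
    print(problem)
```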

Conclusion

Sitemap issues can significantly impact how search engines crawl and index your website, affecting your overall SEO performance. Identifying common problems like inaccessible sitemaps, outdated URLs, or XML errors and addressing them promptly ensures better site visibility and smoother indexing. Regular monitoring and maintenance are key to avoiding sitemap-related SEO pitfalls.

FAQ

What is a sitemap and why is it important?

A sitemap is an XML file that lists all the important pages on your website. It helps search engines like Google crawl and index your content more efficiently, ensuring your pages appear in search results.

Why is my sitemap not being read by Google?

Common reasons include an incorrect sitemap URL, server errors (404, 500), blocked access via robots.txt, or improper sitemap formatting. Validating your sitemap and checking accessibility usually resolves the issue.

How many URLs can I include in a sitemap?

A single sitemap file should contain no more than 50,000 URLs and not exceed 50MB uncompressed. For larger sites, you should split URLs into multiple sitemap files and use a sitemap index file.

What does the “Couldn’t Fetch” error mean in Google Search Console?

This error occurs when Google cannot access your sitemap. Causes may include server downtime, blocked URLs, authentication restrictions, or an incorrect sitemap URL.

Can a sitemap contain URLs with noindex tags or redirects?

No, it’s recommended to include only indexable URLs. URLs that carry a noindex tag, return 301/302 redirects, or are blocked by robots.txt should be removed from the sitemap to avoid confusion for search engines.
